Advances in AI are reshaping the interaction between humans and intelligent machines.

As these systems become more complex, it is increasingly important to design them in alignment with human goals and intentions. However, their growing complexity and fast-paced development render the traditional user-centered design approach unsustainable. Experimental user testing and qualitative studies are often skipped because they are slow and resource-intensive. To address this, our research uses data-driven analytics and machine learning-based user modeling and simulation to make user-centered design more efficient and to better understand how humans interact with intelligent computing technology.

Computational Rational User Modeling

Theories of computational rationality propose that users act to maximize expected utility within their constraints. In human–computer interaction, this means users seek optimal outcomes given their internal (e.g., cognitive or perceptual) and external (e.g., environmental or design-related) bounds. Users adapt their behavioral strategies accordingly, and this adaptation can be approximated with reinforcement learning to generate testable predictions of interaction behavior. Although machine learning is used to learn behavioral policies and make predictions, the formulated bounds restrict the agent’s space of computable interaction strategies so that the learned behavior remains human-like.
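
The idea of maximizing expected utility under bounds can be illustrated with a toy pointing task. The sketch below is not one of our models: it replaces reinforcement learning with plain enumeration over candidate strategies, and the noise model, the parameter values, and all function names (`hit_probability`, `expected_utility`, `best_duration`) are assumptions chosen for illustration. The internal bound is motor noise that grows with movement speed; the external bound is the target width set by the interface design.

```python
import math


def hit_probability(duration, target_width, distance, noise_gain=0.1):
    """Probability that the movement endpoint lands inside the target.

    Assumption: endpoint error is Gaussian with a standard deviation
    proportional to average speed (distance / duration).
    """
    sigma = noise_gain * distance / duration
    half_width = target_width / 2
    # P(|x| < half_width) for x ~ N(0, sigma^2)
    return math.erf(half_width / (sigma * math.sqrt(2)))


def expected_utility(duration, target_width, distance,
                     reward=1.0, time_cost=0.1, noise_gain=0.1):
    """Reward for hitting the target minus a linear cost of time (assumed)."""
    p_hit = hit_probability(duration, target_width, distance, noise_gain)
    return reward * p_hit - time_cost * duration


def best_duration(target_width, distance, candidates):
    """Pick the movement duration with the highest expected utility."""
    return max(candidates,
               key=lambda d: expected_utility(d, target_width, distance))


# Candidate strategies: movement durations from 0.1 s to 5.0 s.
candidates = [0.1 * k for k in range(1, 51)]

easy = best_duration(target_width=0.08, distance=0.2, candidates=candidates)
hard = best_duration(target_width=0.02, distance=0.2, candidates=candidates)
print(f"wide target: {easy:.1f} s, narrow target: {hard:.1f} s")
```

Under these assumptions the simulated user slows down for the narrower target, because the same motor noise makes fast movements too error-prone. In this toy setting, changing the bounds changes the predicted strategy without any behavioral data being collected.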

Biomechanical Forward Simulation

Movement is the only way humans can influence the world. With the exception of brain–computer interfaces, all human communication is ultimately mediated through muscle contractions. Simulated users that replicate human-like, dexterous interactions with technology offer great potential to make user-centered design more efficient, rigorous, and predictable. By using forward simulation, these models produce biomechanically realistic motion, allowing accurate predictions of user performance and muscle effort based on musculoskeletal dynamics. Our work focuses on building biomechanical models that simulate human–computer interaction to inform and improve user interface design.
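
To make the term forward simulation concrete, the following is a deliberately simplified sketch, not one of our musculoskeletal models. It assumes a single joint driven by two antagonistic, torque-generating "muscles" with first-order activation dynamics, a hypothetical proportional-derivative controller that produces the muscle excitations, and illustrative parameter values. The equations of motion are integrated forward in time from the control signals, and a simple effort measure (the time integral of squared activations) is accumulated along the way.

```python
def clip(x, lo, hi):
    """Limit x to the interval [lo, hi]."""
    return max(lo, min(hi, x))


def simulate_reach(target_angle=1.0, duration=2.0, dt=0.001):
    """Forward-simulate a one-joint reach and return (final angle, effort).

    All parameter values below are illustrative assumptions.
    """
    inertia = 0.05      # kg m^2
    damping = 0.5       # N m s / rad
    max_torque = 10.0   # N m produced at full activation
    tau_act = 0.04      # s, activation time constant
    kp, kd = 2.0, 0.3   # gains of the hypothetical controller

    angle, velocity = 0.0, 0.0
    act_flex, act_ext = 0.0, 0.0
    effort = 0.0

    for _ in range(int(round(duration / dt))):
        # Controller: map the tracking error to excitations in [0, 1].
        command = kp * (target_angle - angle) - kd * velocity
        exc_flex = clip(command, 0.0, 1.0)
        exc_ext = clip(-command, 0.0, 1.0)

        # First-order activation dynamics: activation lags excitation.
        act_flex += dt * (exc_flex - act_flex) / tau_act
        act_ext += dt * (exc_ext - act_ext) / tau_act

        # Joint dynamics, integrated with a semi-implicit Euler step.
        torque = max_torque * (act_flex - act_ext)
        acceleration = (torque - damping * velocity) / inertia
        velocity += dt * acceleration
        angle += dt * velocity

        # Effort: time integral of squared muscle activations.
        effort += dt * (act_flex ** 2 + act_ext ** 2)

    return angle, effort


final_angle, effort = simulate_reach()
print(f"final angle: {final_angle:.3f} rad, effort: {effort:.3f}")
```

The simulation yields both the movement and an effort estimate from the same run. Full musculoskeletal models follow the same principle with far more detailed muscle and skeletal dynamics.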

Data-Driven Driver Modeling

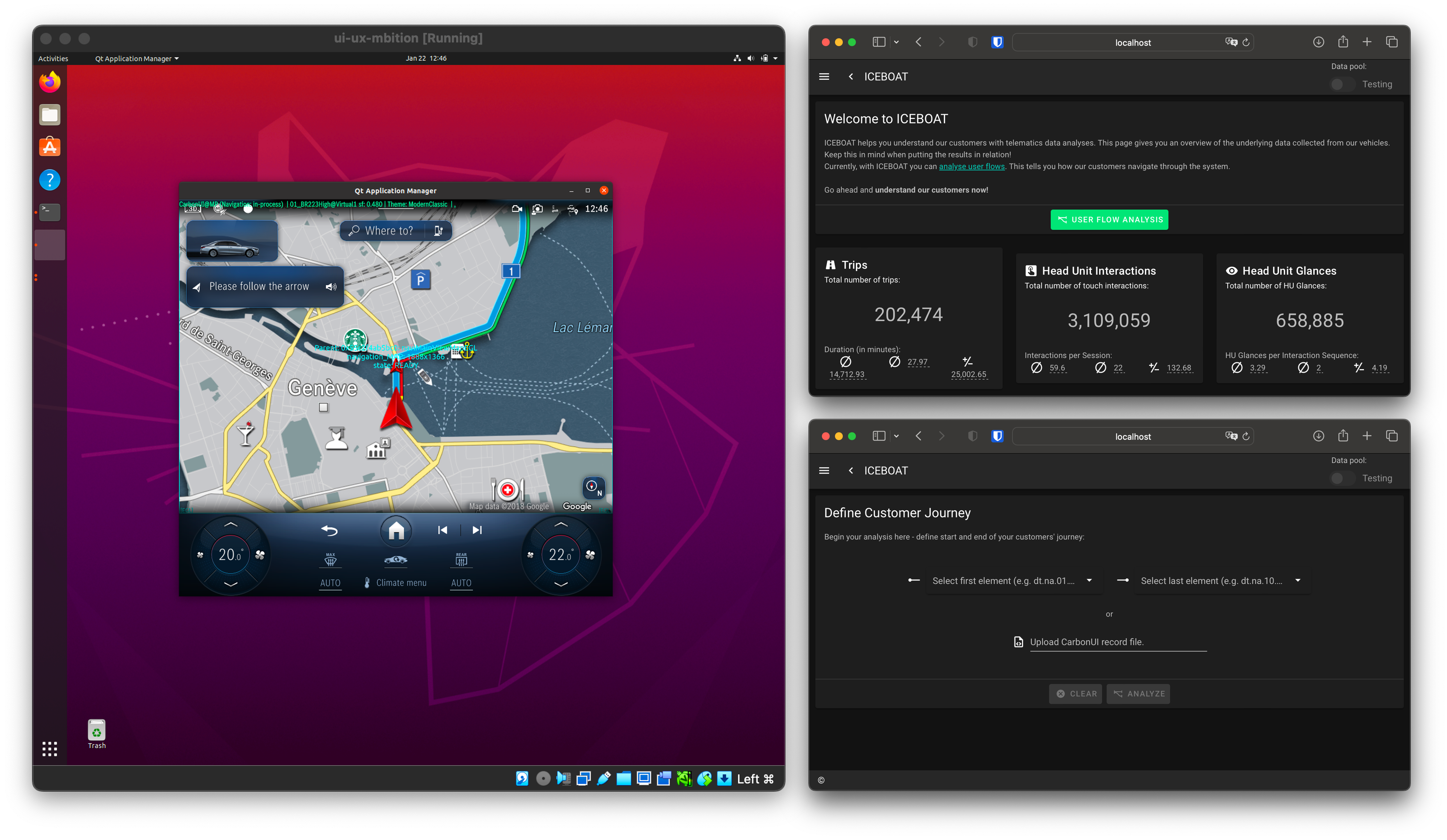

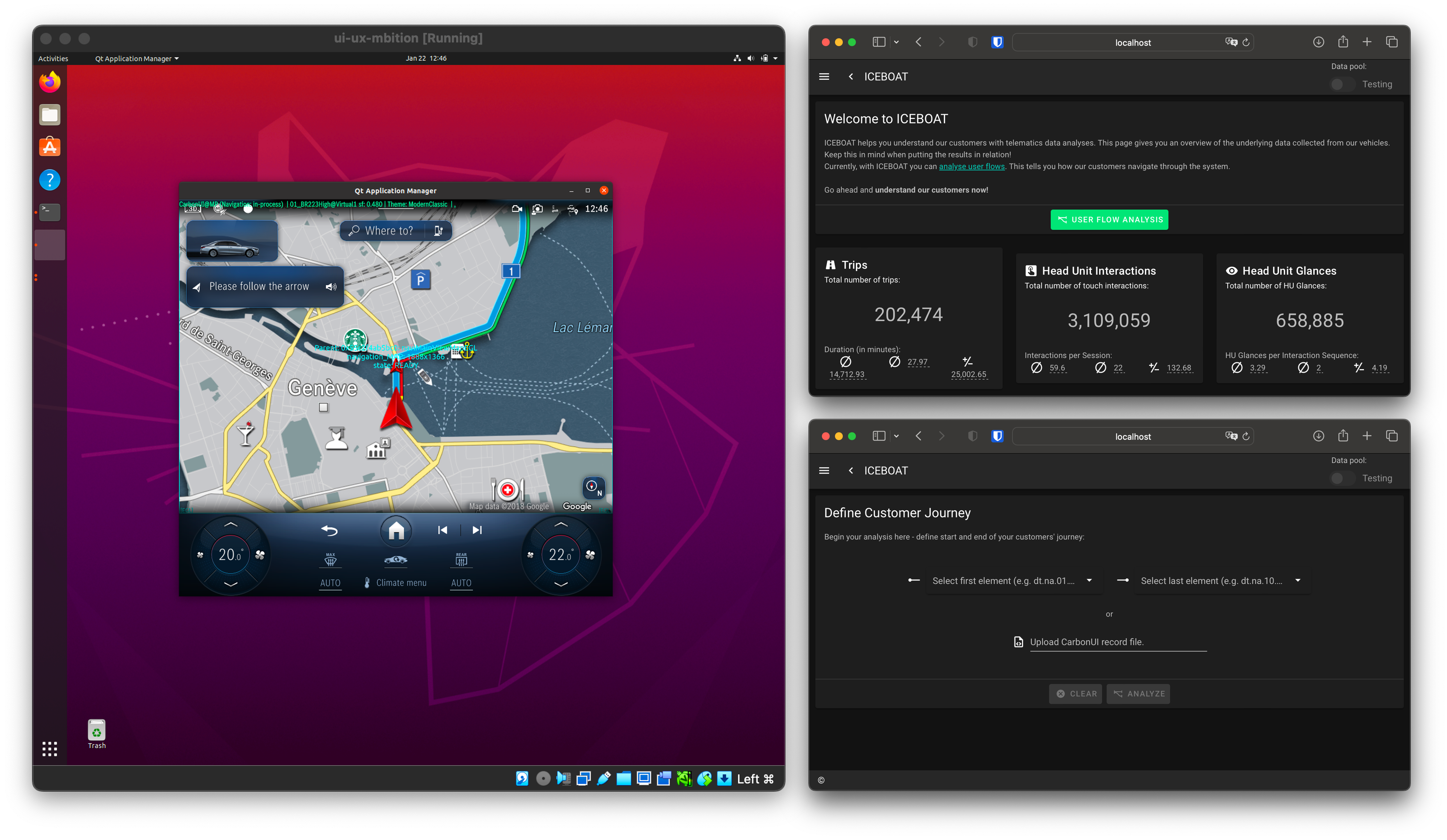

The goal of CIAO’s research is to better understand how drivers allocate their resources while engaging in secondary tasks. A deep understanding of human multitasking, and of how distraction and driving behavior affect each other, facilitates the design of safe automotive HMIs. We combine large-scale user interaction data with driving data and glance behavior data to model driver distraction, behavioral adaptation, and sensitivity to the driving context. We apply statistical modeling to evaluate new design artifacts, and we apply various machine learning approaches to predict human interaction behavior. These predictions help us better understand the interaction itself and evaluate new interfaces before the first user study is run.

Mixed-Reality Driving Simulator

To evaluate the computational models with human participants, we aim to mimic the real driving environment as closely as possible. Our mixed-reality driving simulator allows us to create an immersive driving and interaction experience. We use the immersiveness of virtual reality to simulate the driving environment but keep the vehicle interior real, so that secondary task engagements (e.g., interactions with the center stack touchscreen) feel as real as possible. The simulator’s code is open source, and we welcome anyone who wants to use it or collaborate with us: GitHub Repository