Publications

2025

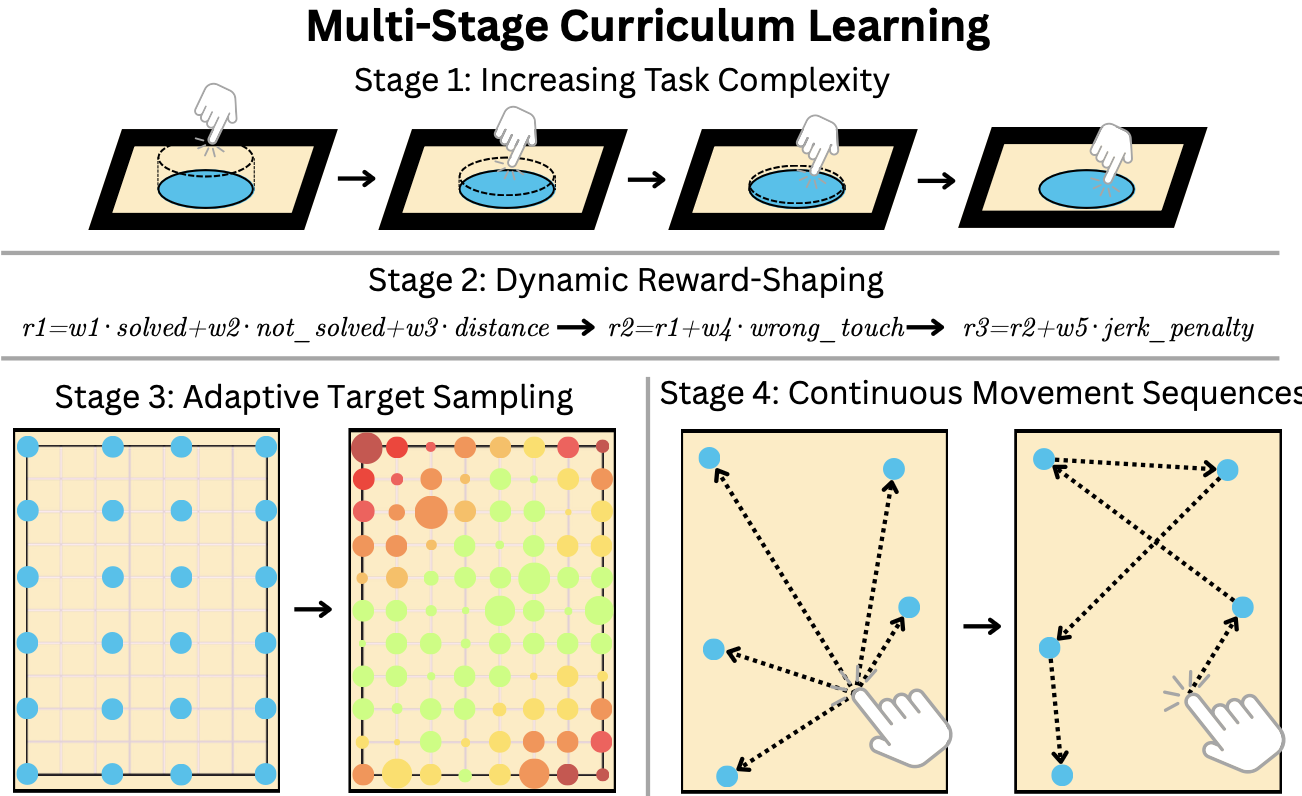

Increasing Interaction Fidelity: Training Routines for Biomechanical Models in HCI

Michal Patryk Miazga, Patrick Ebel

The 38th Annual ACM Symposium on User Interface Software and Technology (UIST Adjunct '25) (2025)

ABSTRACT

Biomechanical forward simulation holds great potential for HCI, enabling the generation of human-like movements in interactive tasks. However, training biomechanical models with reinforcement learning is challenging, particularly for precise and dexterous movements like those required for touchscreen interactions on mobile devices. Current approaches are limited in their interaction fidelity, require restricting the underlying biomechanical model to reduce complexity, and do not generalize well. In this work, we propose practical improvements to training routines that reduce training time, increase interaction fidelity beyond existing methods, and enable the use of more complex biomechanical models. Using a touchscreen pointing task, we demonstrate that curriculum learning, action masking, more complex network configurations, and simple adjustments to the simulation environment can significantly improve the agent's ability to learn accurate touch behavior. Our work provides HCI researchers with practical tips and training routines for developing better biomechanical models of human-like interaction fidelity.

Mind & Motion: Opportunities and Applications of Integrating Biomechanics and Cognitive Models in HCI

Arthur Fleig, Florian Fischer, Markus Klar, Patrick Ebel, Miroslav Bachinski, Per Ola Kristensson, Roderick Murray-Smith, Antti Oulasvirta

The 38th Annual ACM Symposium on User Interface Software and Technology (UIST Adjunct '25) (2025)

ABSTRACT

Computational models of how users perceive and act within a virtual or physical environment offer enormous potential for the understanding and design of user interactions. Cognition models have been used to understand the role of attention and individual preferences and beliefs on human decision making during interaction, while biomechanical simulations have been successfully applied to analyse and predict physical effort, fatigue, and discomfort. The next frontier in HCI lies in connecting these models to enable robust, diverse, and representative simulations of different user groups. These embodied user simulations could predict user intents, strategies, and movements during interaction more accurately, benchmark interfaces and interaction techniques in terms of performance and ergonomics, and guide adaptive system design. This UIST workshop explores ideas for integrating computational models into HCI and discusses use cases such as UI/UX design, automated system testing, and personalised adaptive interfaces. It brings researchers from relevant disciplines together to identify key opportunities and challenges as well as feasible next steps for bridging mind and motion to simulate interactive user behaviour.

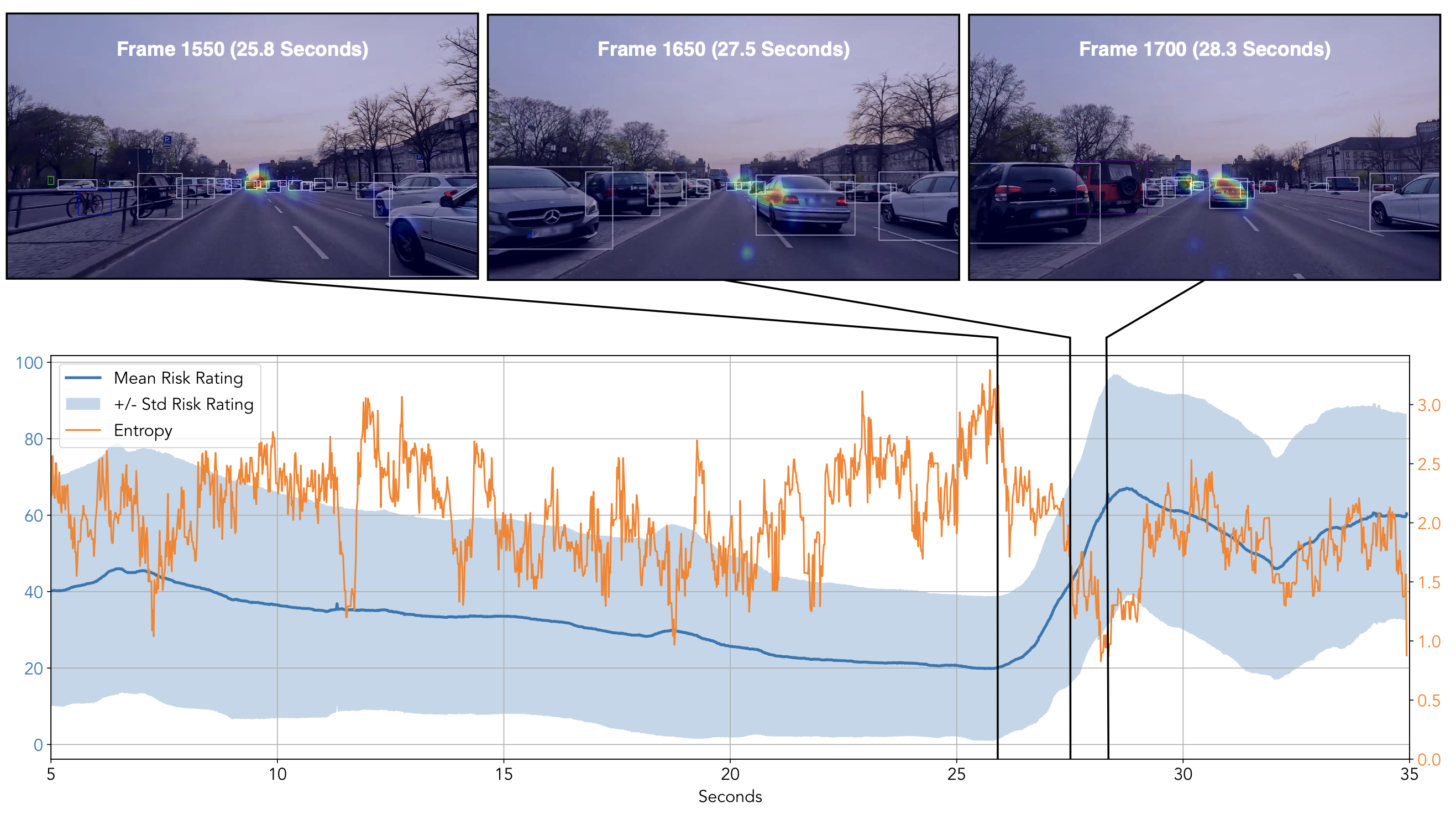

Visual Sampling Behavior Does not Explain Risk Perception: A Data-Driven xAI Investigation

Martin Lorenz, Jan Hilbert, Philipp Asteriou, Philipp Wintersberger, Patrick Ebel

17th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (2025)

ABSTRACT

How do drivers perceive risk? Understanding what situations and factors cause drivers to perceive situations as critical can improve our understanding of road user behavior and inform automated driving technology. To investigate the factors that shape drivers’ risk perception, we conducted an eye-tracking study with 27 participants who watched dashcam videos and continuously rated the perceived risk of various driving situations. Using the resulting dataset, we developed a computer vision-based machine learning approach that generates explainable predictions of perceived risk from video and eye-tracking data. Our SHAP analysis reveals that the proximity of objects and number of cars in a scene are the most significant contributors to perceived risk. Most interestingly, while people tend to sample similar objects in critical situations, their risk perception remains highly personal, making visual sampling behavior a weak predictor of perceived risk. Overall, our explanations reveal non-linear insights beyond previous work, suggesting that risk perception is not only shaped by visual input, but primarily by cognitive processes, which is in line with theoretical models of situation awareness. The dataset, source code, and a comprehensive usage guide are publicly available.

Predicting Multitasking in Manual and Automated Driving with Optimal Supervisory Control

Jussi Jokinen, Patrick Ebel, Tuomo Kujala

Preprint (2025)

ABSTRACT

Modern driving involves interactive technologies that can divert attention, increasing the risk of accidents. This paper presents a computational cognitive model that simulates human multitasking while driving. Based on optimal supervisory control theory, the model predicts how multitasking adapts to variations in driving demands, interactive tasks, and automation levels. Unlike previous models, it accounts for context-dependent multitasking across different degrees of driving automation. The model predicts longer in-car glances on straight roads and shorter glances during curves. It also anticipates increased glance durations with driver aids such as lane-centering assistance and their interaction with environmental demands. Validated against two empirical datasets, the model offers insights into driver multitasking amid evolving in-car technologies and automation.

Evaluating Attention Management Systems for Dynamic Monitoring Tasks

Anton Gasse, Alexander Lingler, Martin Lorenz, Antti Oulasvirta, Philipp Wintersberger, Patrick Ebel

Adjunct Proceedings of the 4th Annual Symposium on Human-Computer Interaction for Work (2025)

ABSTRACT

In many work environments, operators must monitor multiple information sources, quickly identify critical situations, and respond appropriately. Attention Management Systems (AMS) are designed to help users coordinate attention in such contexts. However, while most AMS research has focused on multitasking and task-switching, their potential to guide gaze in dynamic monitoring remains unexplored. To address this, we evaluated two AMS designs in a controlled experiment (n = 15) using Senders’ Dial Task: Ambient

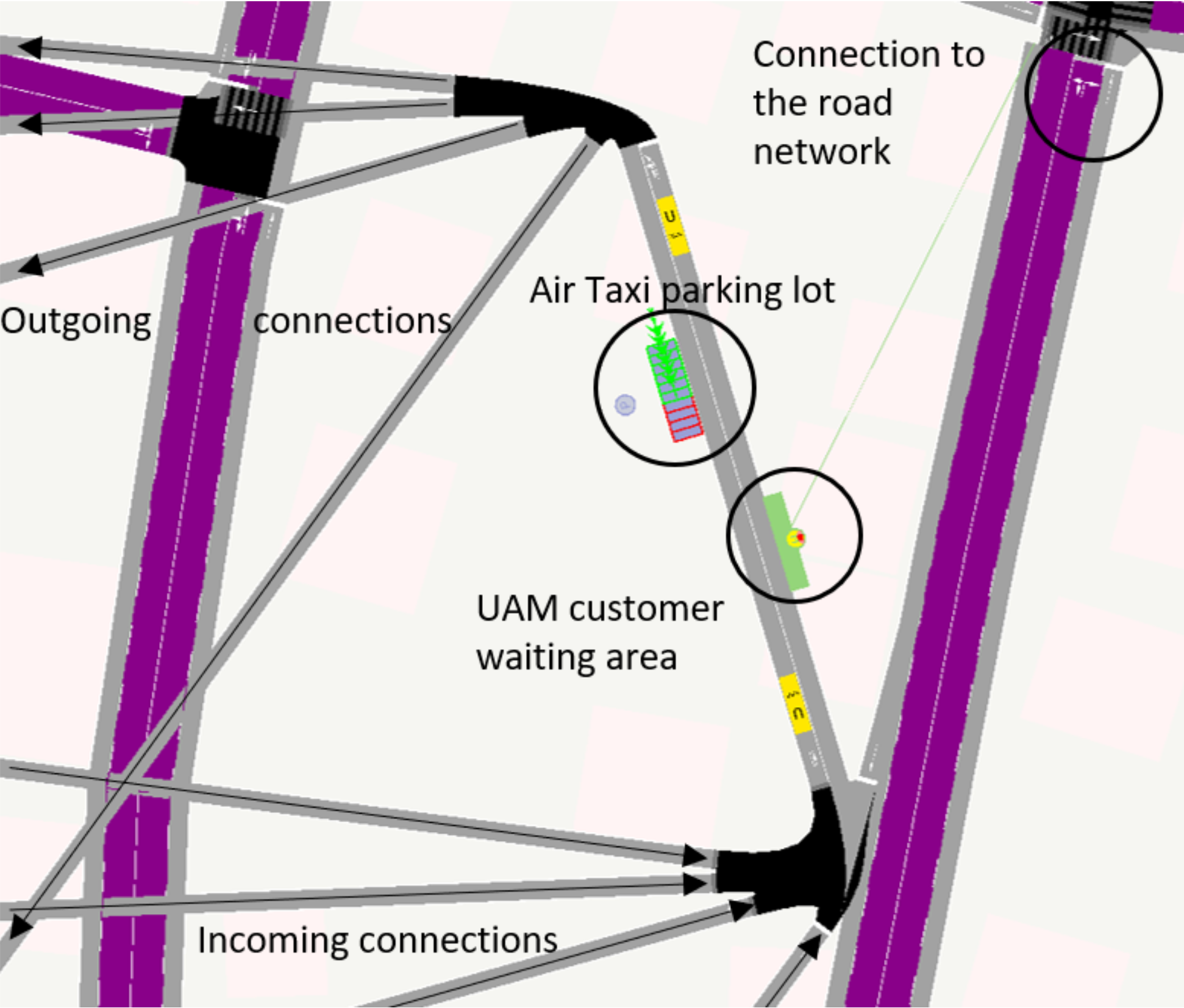

UAM-SUMO: Simulacra of Urban Air Mobility Using SUMO To Study Large-Scale Effects

Mark Colley, Julian Czymmeck, Pascal Jansen, Luca-Maxim Meinhardt, Patrick Ebel, Enrico Rukzio

Proceedings of the 2025 ACM/IEEE International Conference on Human-Robot Interaction (2025)

ABSTRACT

Urban Air Mobility (UAM) emerges as a potential solution to urban congestion. However, as it lacks integration with existing transportation systems, methods to study its impact are necessary. Traditional empirical approaches are insufficient to study large-scale effects in this not-yet-real context. We developed UAM-SUMO, an extension of the SUMO simulation platform, to simulate the impact of UAM on public transportation, particularly how air taxis affect traffic flow and mode choices. We detail the modifications to SUMO and the UAM operation parameters. We open-source our code at https://github.com/M-Colley/uam-sumo and present a proof-of-concept data collection and analysis for Ingolstadt, Germany.

2024

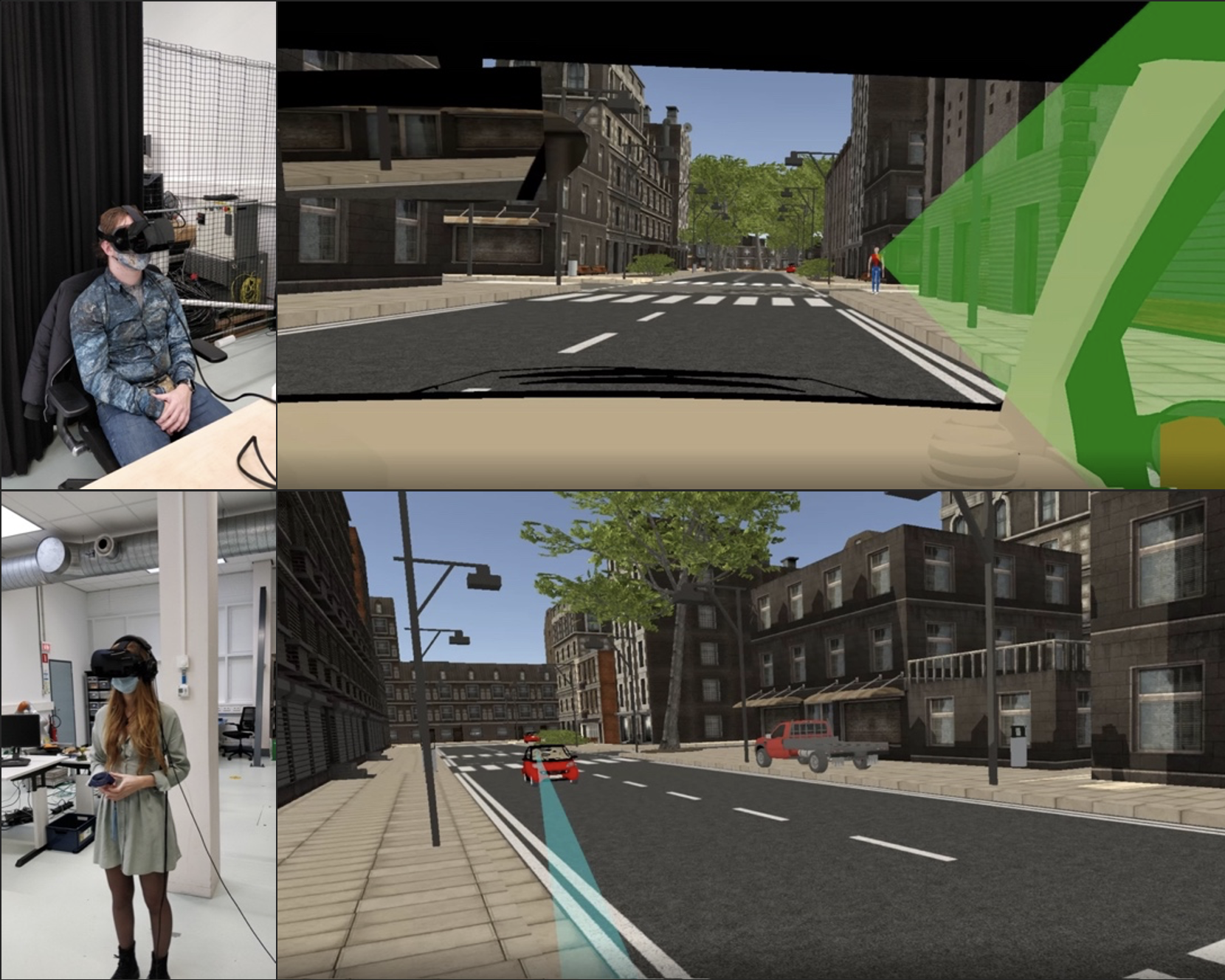

It Is Not Always Just One Road User: Workshop on Multi-Agent Automotive Research

Pavlo Bazilinskyy, Patrick Ebel, Francesco Walker, Debargha Dey, Tram Thi Minh Tran

16th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (2024)

ABSTRACT

In the future, roads will host a complex mix of automated and manually operated vehicles, along with vulnerable road users. However, most automotive user interfaces and human factors research focus on single-agent studies, where one human interacts with one vehicle. Only a few studies incorporate multi-agent setups. This workshop aims to (1) examine the current state of multi-agent research in the automotive domain, (2) serve as a platform for discussion toward more realistic multi-agent setups, and (3) discuss methods and practices to conduct such multi-agent research. The goal is to synthesize the insights from the AutoUI community, creating the foundation for advancing multi-agent traffic interaction research.

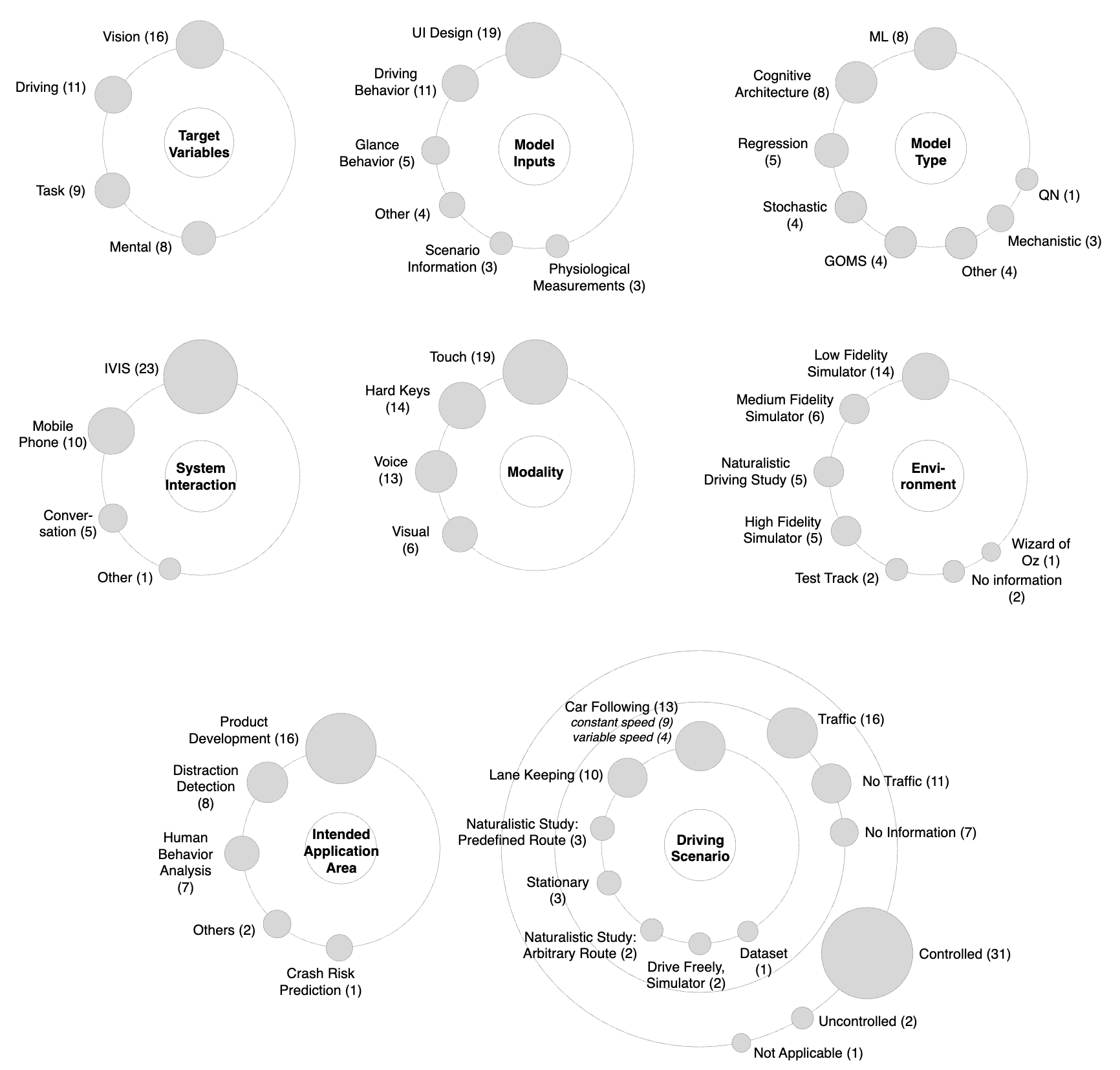

Computational Models for In-Vehicle User Interface Design: A Systematic Literature Review

Martin Lorenz, Tiago Amorim, Debargha Dey, Mersedeh Sadeghi, Patrick Ebel

16th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (2024)

ABSTRACT

In this review, we analyze the current state of the art of computational models for in-vehicle User Interface (UI) design. Driver distraction, often caused by drivers performing Non Driving Related Tasks (NDRTs), is a major contributor to vehicle crashes. Accordingly, in-vehicle User Interfaces (UIs) must be evaluated for their distraction potential. Computational models are a promising solution to automate this evaluation, but are not yet widely used, limiting their real-world impact. We systematically review the existing literature on computational models for NDRTs to analyze why current approaches have not yet found their way into practice. We found that while many models are intended for UI evaluation, they focus on small and isolated phenomena that are disconnected from the needs of automotive UI designers. In addition, very few approaches make predictions detailed enough to inform current design processes. Our analysis of the state of the art, the identified research gaps, and the formulated research potentials can guide researchers and practitioners toward computational models that improve the automotive User Interface (UI) design process.

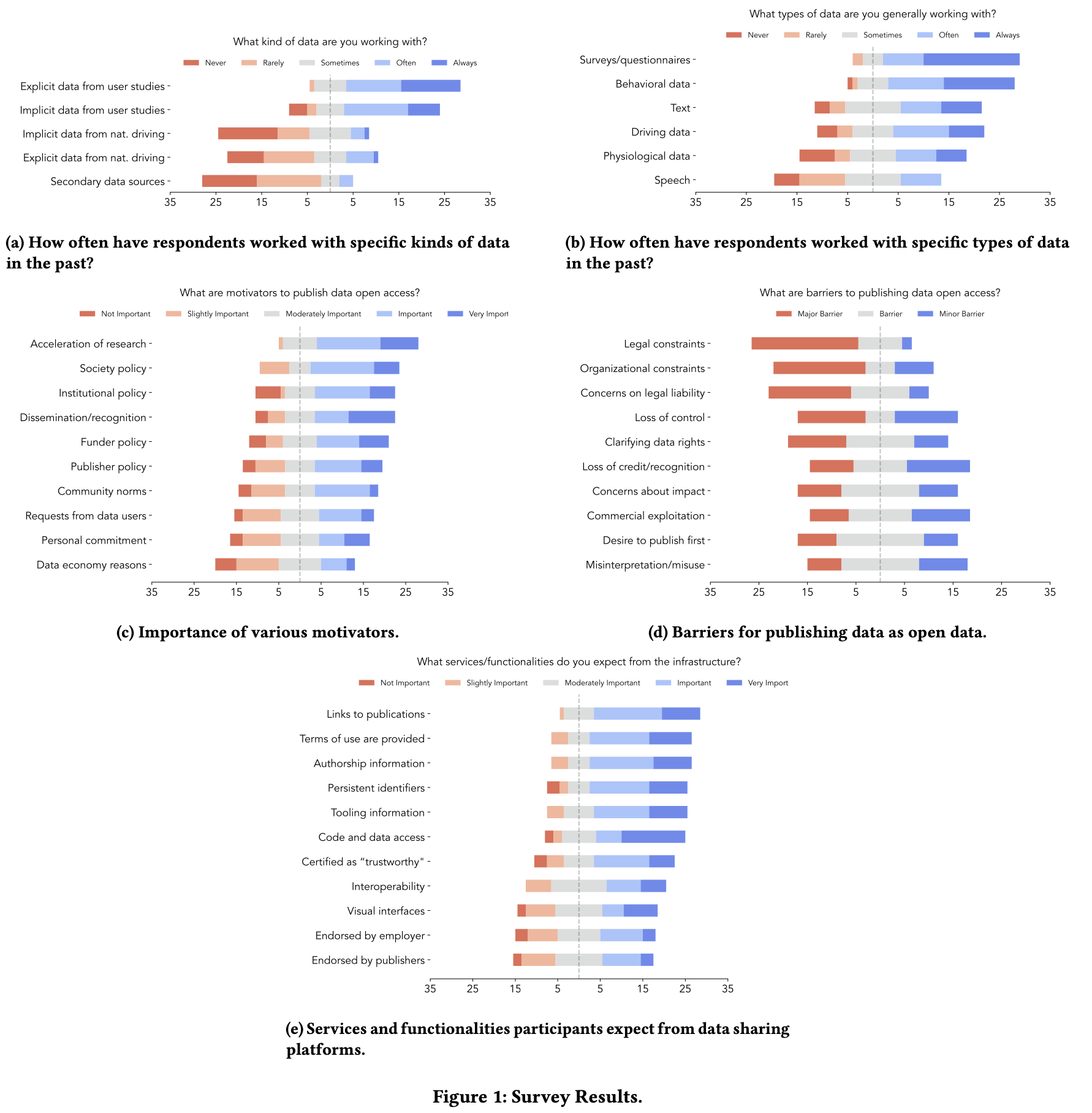

Changing Lanes Toward Open Science: Openness and Transparency in Automotive User Research

Patrick Ebel, Pavlo Bazilinskyy, Mark Colley, Courtney Michael Goodridge, Philipp Hock, Christian Janssen, Hauke Sandhaus, Aravinda Ramakrishnan Srinivasan, Philipp Wintersberger

16th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (2024)

ABSTRACT

We review the state of open science and the perspectives on open data sharing within the automotive user research community. Openness and transparency are critical not only for judging the quality of empirical research, but also for accelerating scientific progress and promoting an inclusive scientific community. However, there is little documentation of these aspects within the automotive user research community. To address this, we report two studies that identify (1) community perspectives on motivators and barriers to data sharing, and (2) how openness and transparency have changed in papers published at AutomotiveUI over the past 5 years. We show that while open science is valued by the community and openness and transparency have improved, overall compliance is low. The most common barriers are legal constraints and confidentiality concerns. Although research published at AutomotiveUI relies more on quantitative methods than research published at CHI, openness and transparency are not as well established. Based on our findings, we provide suggestions for improving openness and transparency, arguing that the motivators for open science must outweigh the barriers. All supporting materials are freely available at: https://osf.io/zdpek/

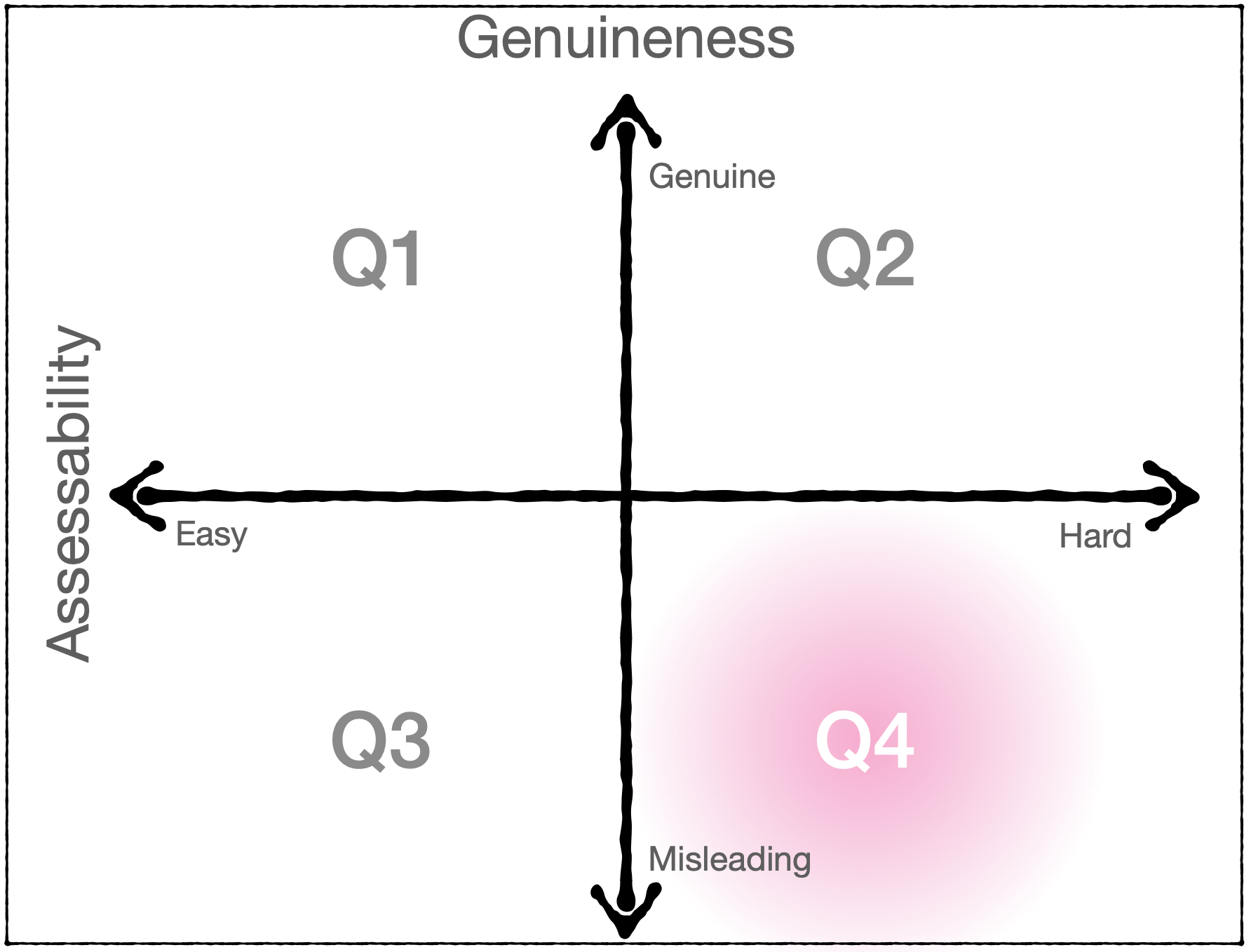

Explaining the Unexplainable: The Impact of Misleading Explanations on Trust in Unreliable Predictions for Hardly Assessable Tasks

Mersedeh Sadeghi, Daniel Pöttgen, Patrick Ebel, Andreas Vogelsang

32nd ACM Conference on User Modeling, Adaptation and Personalization (2024)

ABSTRACT

To increase trust in systems, engineers strive to create explanations that are as accurate as possible. However, if the system’s accuracy is compromised, providing explanations for its incorrect behavior may inadvertently lead to misleading explanations. This concern is particularly pertinent when the correctness of the system is difficult for users to judge. In an online survey experiment with 162 participants, we analyze the impact of misleading explanations on users’ perceived and demonstrated trust in a system that performs a hardly assessable task in an unreliable manner. Participants who used a system that provided potentially misleading explanations rated their trust significantly higher than participants who saw the system’s prediction alone. They also aligned their initial prediction with the system’s prediction significantly more often. Our findings underscore the importance of exercising caution when generating explanations, especially in tasks that are inherently difficult to evaluate. The paper and supplementary materials are available at https://doi.org/10.17605/osf.io/azu72

Generative AI and Attentive User Interfaces: Five Strategies to Enhance Take-Over Quality in Automated Driving

Patrick Ebel

Workshop on Interruptions and Attention Management: Exploring the Potential of Generative AI at MUM 2023 (2024)

ABSTRACT

As the automotive world moves toward higher levels of driving automation, Level 3 automated driving represents a critical juncture. In Level 3 driving, vehicles can drive alone under limited conditions, but drivers are expected to be ready to take over when the system requests. Assisting the driver to maintain an appropriate level of Situation Awareness (SA) in such contexts becomes a critical task. This position paper explores the potential of Attentive User Interfaces (AUIs) powered by generative Artificial Intelligence (AI) to address this need. Rather than relying on overt notifications, we argue that AUIs based on novel AI technologies such as large language models or diffusion models can be used to improve SA in an unconscious and subtle way without negative effects on drivers’ overall workload. Accordingly, we propose five strategies for how generative AI can be used to improve the quality of takeovers and, ultimately, road safety.

2023

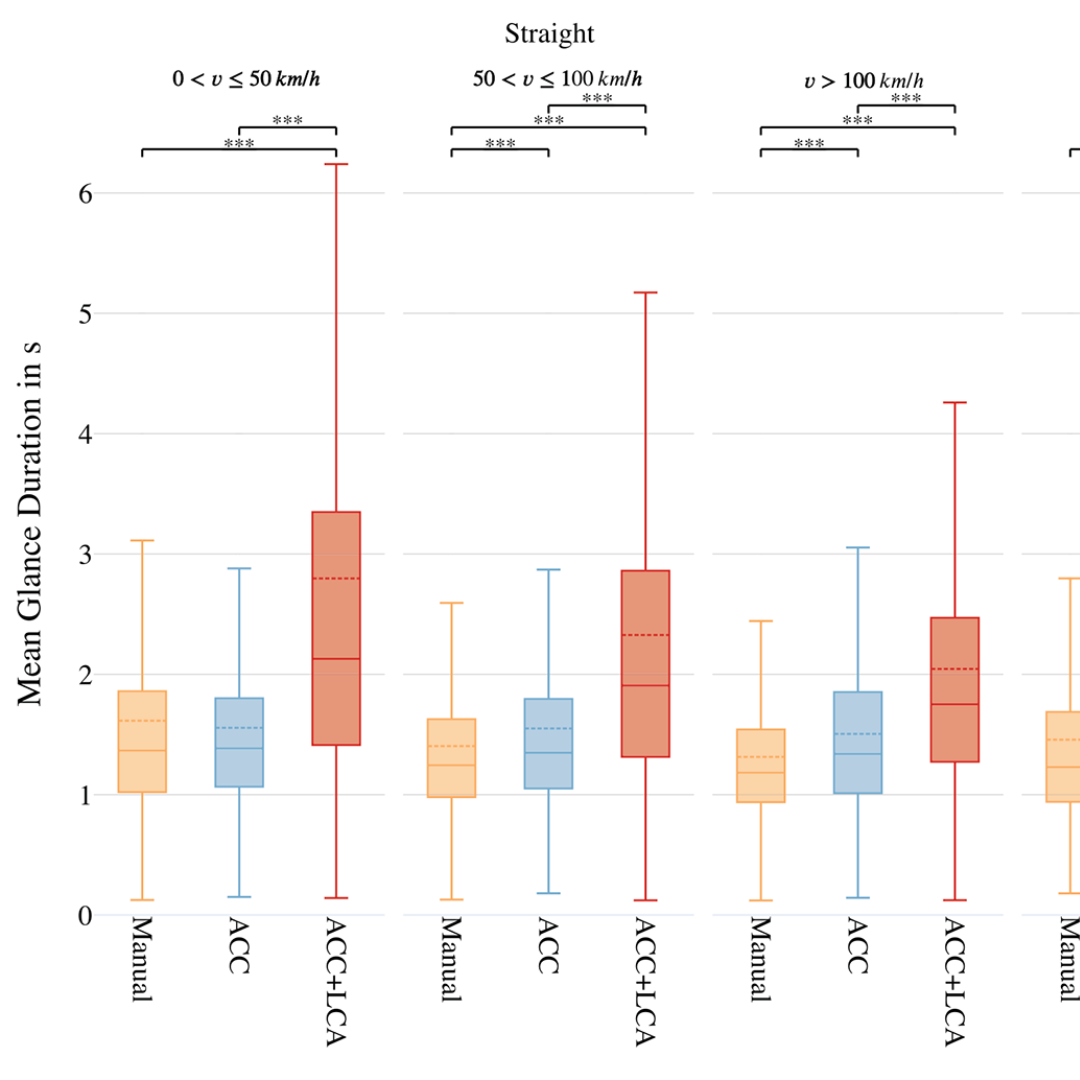

Multitasking While Driving: How Drivers Self-Regulate Their Interaction with In-Vehicle Touchscreens in Automated Driving

Patrick Ebel, Christoph Lingenfeld, Andreas Vogelsang

International Journal of Human-Computer Interaction (2023)

ABSTRACT

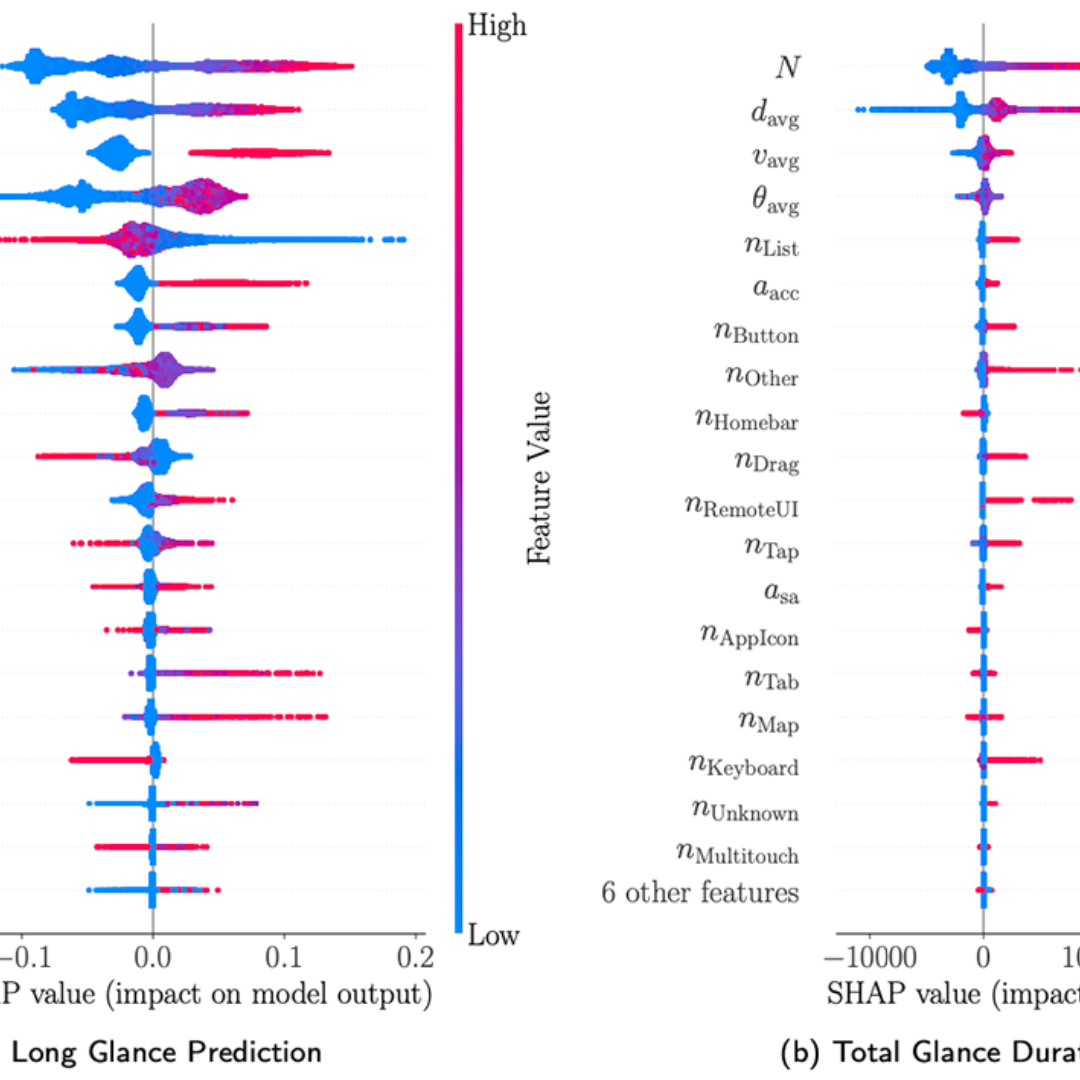

With modern infotainment systems, drivers are increasingly tempted to engage in secondary tasks while driving. Since distracted driving is already one of the main causes of fatal accidents, in-vehicle touchscreens must be as little distracting as possible. To ensure that these systems are safe to use, they undergo elaborate and expensive empirical testing, requiring fully functional prototypes. Thus, early-stage methods informing designers about the implication their design may have on driver distraction are of great value. This paper presents a machine learning method that, based on anticipated usage scenarios, predicts the visual demand of in-vehicle touchscreen interactions and provides local and global explanations of the factors influencing drivers’ visual attention allocation. The approach is based on large-scale natural driving data continuously collected from production line vehicles and employs the SHapley Additive exPlanation (SHAP) method to provide explanations leveraging informed design decisions. Our approach is more accurate than related work and identifies interactions during which long glances occur with 68 % accuracy and predicts the total glance duration with a mean error of 2.4s. Our explanations replicate the results of various recent studies and provide fast and easily accessible insights into the effect of UI elements, driving automation, and vehicle speed on driver distraction. The system can not only help designers to evaluate current designs but also help them to better anticipate and understand the implications their design decisions might have on future designs.

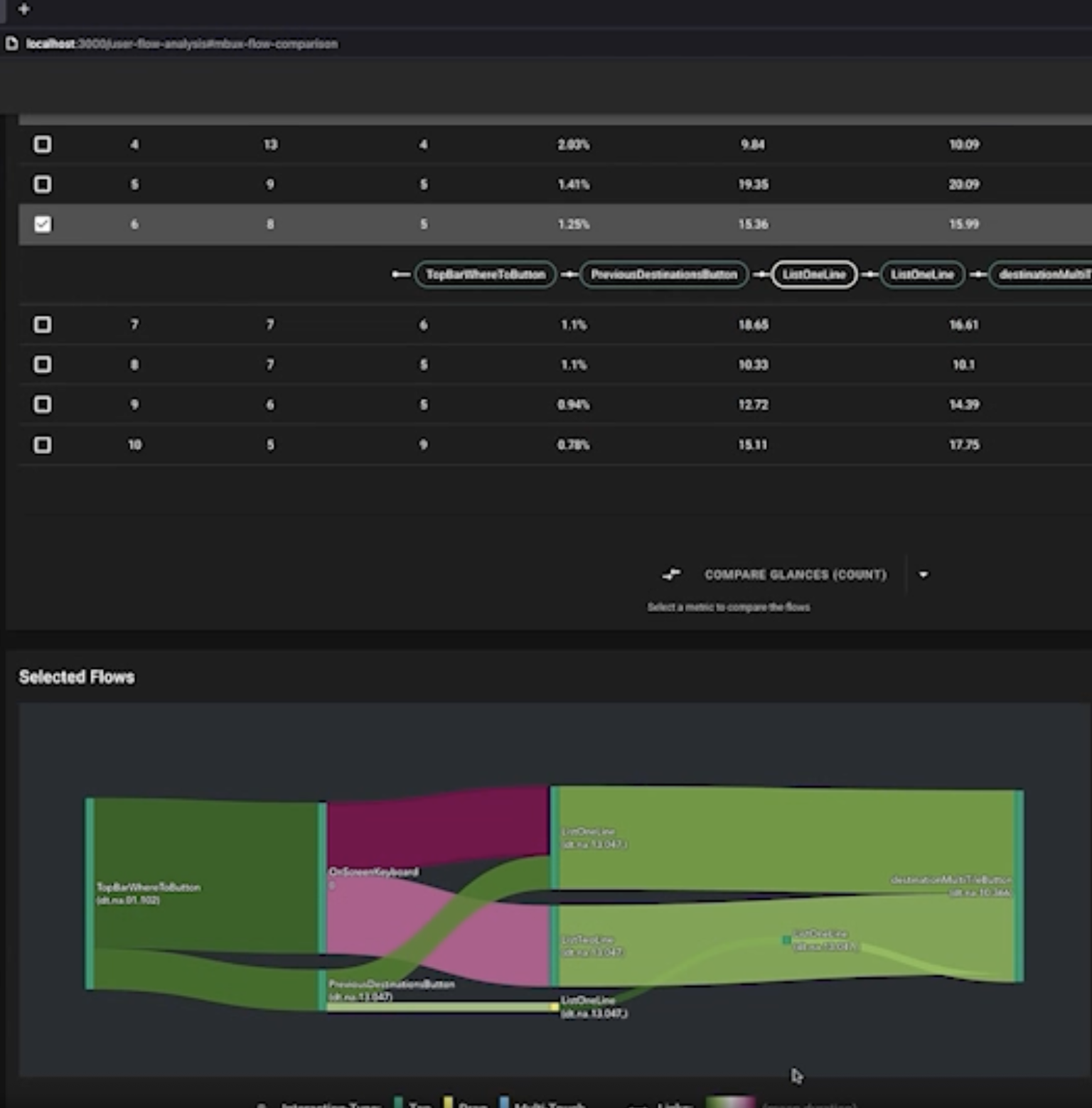

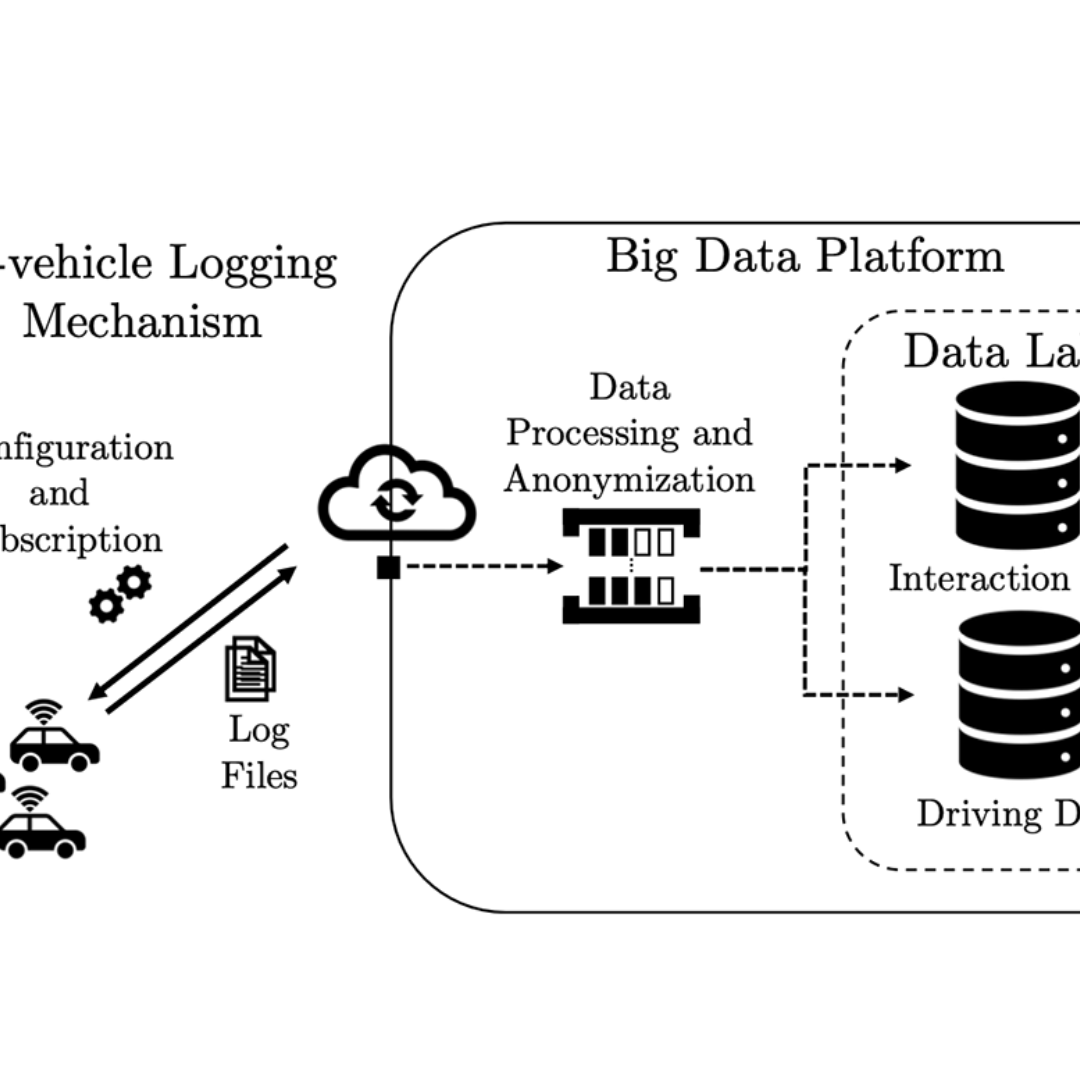

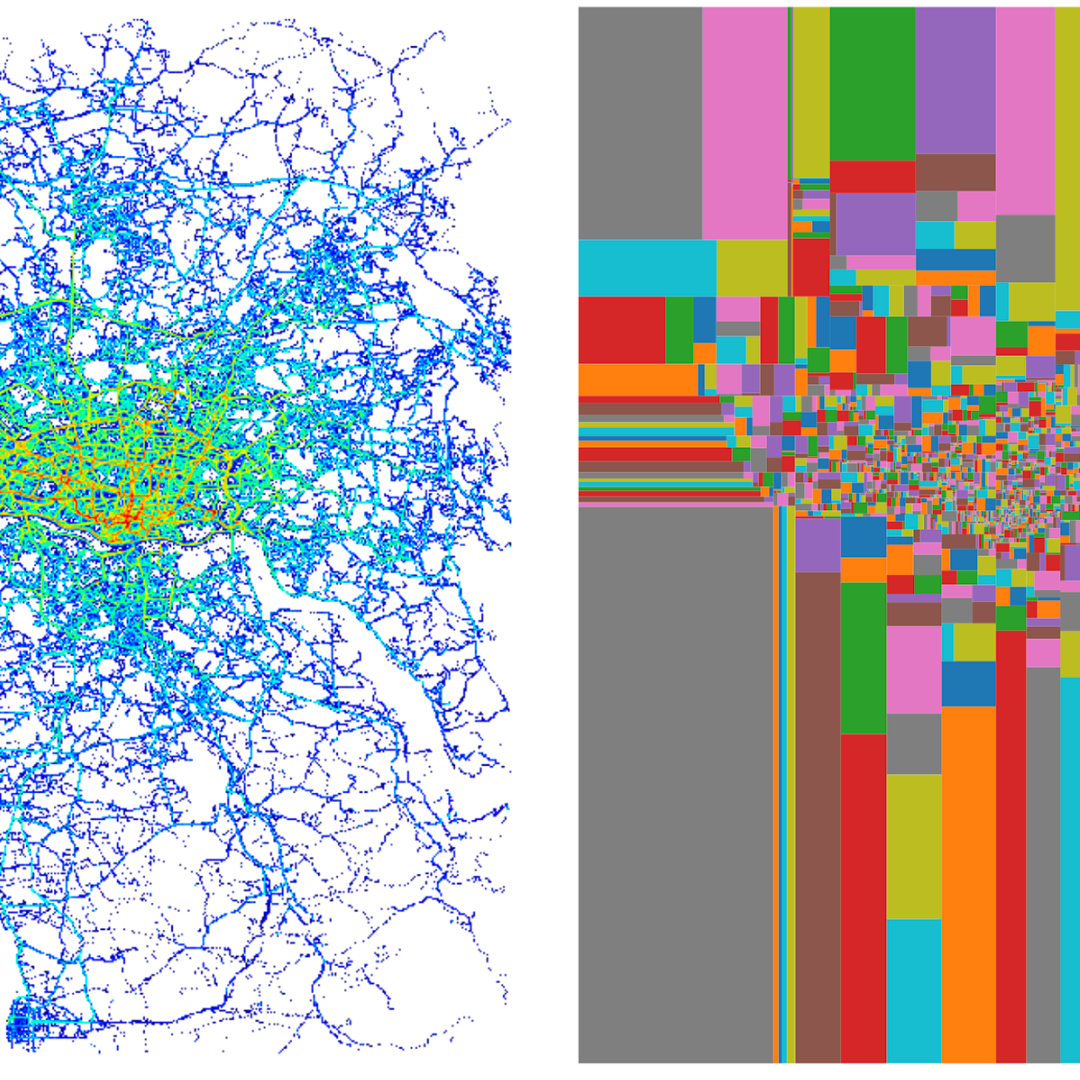

Exploring Millions of User Interactions with ICEBOAT: Big Data Analytics for Automotive User Interfaces

Patrick Ebel, Kim Julian Gülle, Christoph Lingenfeld, Andreas Vogelsang

15th International ACM Conference on Automotive User Interfaces and Interactive Vehicular Applications (2023)

ABSTRACT

User Experience (UX) professionals need to be able to analyze large amounts of usage data on their own to make evidence-based design decisions. However, the design process for In-Vehicle Information Systems (IVISs) lacks data-driven support and effective tools for visualizing and analyzing user interaction data. Therefore, we propose ICEBOAT, an interactive visualization tool tailored to the needs of automotive UX experts to effectively and efficiently evaluate driver interactions with IVISs. ICEBOAT visualizes telematics data collected from production line vehicles, allowing UX experts to perform task-specific analyses. Following a mixed methods User-Centered Design (UCD) approach, we conducted an interview study (N=4) to extract the domain specific information and interaction needs of automotive UX experts and used a co-design approach (N=4) to develop an interactive analysis tool. Our evaluation (N=12) shows that ICEBOAT enables UX experts to efficiently generate knowledge that facilitates data-driven design decisions.

Data-Driven Evaluation of In-Vehicle Information Systems

Patrick Ebel

Dissertation (2023)

ABSTRACT

Today’s In-Vehicle Information Systems (IVISs) are feature-rich systems that provide the driver with numerous options for entertainment, information, comfort, and communication. Drivers can stream their favorite songs, read reviews of nearby restaurants, or change the ambient lighting to their liking. To do so, they interact with large center stack touchscreens that have become the main interface between the driver and IVISs. To interact with these systems, drivers must take their eyes off the road which can impair their driving performance. This makes IVIS evaluation critical not only to meet customer needs but also to ensure road safety. The growing number of features, the distraction caused by large touchscreens, and the impact of driving automation on driver behavior pose significant challenges for the design and evaluation of IVISs. Traditionally, IVISs are evaluated qualitatively or through small-scale user studies using driving simulators. However, these methods are not scalable to the growing number of features and the variety of driving scenarios that influence driver interaction behavior. We argue that data-driven methods can be a viable solution to these challenges and can assist automotive User Experience (UX) experts in evaluating IVISs. Therefore, we need to understand how data-driven methods can facilitate the design and evaluation of IVISs, how large amounts of usage data need to be visualized, and how drivers allocate their visual attention when interacting with center stack touchscreens. In Part I, we present the results of two empirical studies and create a comprehensive understanding of the role that data-driven methods currently play in the automotive UX design process. We found that automotive UX experts face two main conflicts: First, results from qualitative or small-scale empirical studies are often not valued in the decision-making process.
Second, UX experts often do not have access to customer data and lack the means and tools to analyze it appropriately. As a result, design decisions are often not user-centered and are based on subjective judgments rather than evidence-based customer insights. Our results show that automotive UX experts need data-driven methods that leverage large amounts of telematics data collected from customer vehicles. They need tools to help them visualize and analyze customer usage data and computational methods to automatically evaluate IVIS designs. In Part II, we present ICEBOAT, an interactive user behavior analysis tool for automotive user interfaces. ICEBOAT processes interaction data, driving data, and glance data, collected over-the-air from customer vehicles and visualizes it on different levels of granularity. Leveraging our multi-level user behavior analysis framework, it enables UX experts to effectively and efficiently evaluate driver interactions with touchscreen-based IVISs concerning performance and safety-related metrics. In Part III, we investigate drivers’ multitasking behavior and visual attention allocation when interacting with center stack touchscreens while driving. We present the first naturalistic driving study to assess drivers’ tactical and operational self-regulation with center stack touchscreens. Our results show significant differences in drivers’ interaction and glance behavior in response to different levels of driving automation, vehicle speed, and road curvature. During automated driving, drivers perform more interactions per touchscreen sequence and increase the time spent looking at the center stack touchscreen. These results emphasize the importance of context-dependent driver distraction assessment of driver interactions with IVISs. Motivated by this we present a machine learning-based approach to predict and explain the visual demand of in-vehicle touchscreen interactions based on customer data.
By predicting the visual demand of yet unseen touchscreen interactions, our method lays the foundation for automated data-driven evaluation of early-stage IVIS prototypes. The local and global explanations provide additional insights into how design artifacts and driving context affect drivers’ glance behavior. Overall, this thesis identifies current shortcomings in the evaluation of IVISs and proposes novel solutions based on visual analytics and statistical and computational modeling that generate insights into driver interaction behavior and assist UX experts in making user-centered design decisions.

On the forces of driver distraction: Explainable predictions for the visual demand of in-vehicle touchscreen interactions

Patrick Ebel, Christoph Lingenfeld, Andreas Vogelsang

Accident Analysis & Prevention (2023)

ABSTRACT

With modern infotainment systems, drivers are increasingly tempted to engage in secondary tasks while driving. Since distracted driving is already one of the main causes of fatal accidents, in-vehicle touchscreens must be as little distracting as possible. To ensure that these systems are safe to use, they undergo elaborate and expensive empirical testing, requiring fully functional prototypes. Thus, early-stage methods informing designers about the implication their design may have on driver distraction are of great value. This paper presents a machine learning method that, based on anticipated usage scenarios, predicts the visual demand of in-vehicle touchscreen interactions and provides local and global explanations of the factors influencing drivers’ visual attention allocation. The approach is based on large-scale natural driving data continuously collected from production line vehicles and employs the SHapley Additive exPlanation (SHAP) method to provide explanations leveraging informed design decisions. Our approach is more accurate than related work and identifies interactions during which long glances occur with 68 % accuracy and predicts the total glance duration with a mean error of 2.4s. Our explanations replicate the results of various recent studies and provide fast and easily accessible insights into the effect of UI elements, driving automation, and vehicle speed on driver distraction. The system can not only help designers to evaluate current designs but also help them to better anticipate and understand the implications their design decisions might have on future designs.

2022

How Do Drivers Self-Regulate their Secondary Task Engagements? The Effect of Driving Automation on Touchscreen Interactions and Glance Behavior

Patrick Ebel, Moritz Berger, Christoph Lingenfeld, Andreas Vogelsang

14th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (2022)

ABSTRACT

With ever-improving driver assistance systems and large touchscreens becoming the main in-vehicle interface, drivers are more tempted than ever to engage in distracting non-driving-related tasks. However, little research exists on how driving automation affects drivers’ self-regulation when interacting with center stack touchscreens. To investigate this, we employ multilevel models on a real-world driving dataset consisting of 10,139 sequences. Our results show significant differences in drivers’ interaction and glance behavior in response to varying levels of driving automation, vehicle speed, and road curvature. During partially automated driving, drivers are not only more likely to engage in secondary touchscreen tasks, but their mean glance duration toward the touchscreen also increases by 12 % (Level 1) and 20 % (Level 2) compared to manual driving. We further show that the effect of driving automation on drivers’ self-regulation is larger than that of vehicle speed and road curvature. The derived knowledge can facilitate the safety evaluation of infotainment systems and the development of context-aware driver monitoring systems.

2021

Visualizing Event Sequence Data for User Behavior Evaluation of In-Vehicle Information Systems

Patrick Ebel, Christoph Lingenfeld, Andreas Vogelsang

13th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (2021)

ABSTRACT

With modern In-Vehicle Information Systems (IVISs) becoming more capable and complex than ever, their evaluation becomes increasingly difficult. The analysis of large amounts of user behavior data can help to cope with this complexity and can support UX experts in designing IVISs that serve customer needs and are safe to operate while driving. We, therefore, propose a Multi-level User Behavior Visualization Framework providing effective visualizations of user behavior data that is collected via telematics from production vehicles. Our approach visualizes user behavior data on three different levels: (1) The Task Level View aggregates event sequence data generated through touchscreen interactions to visualize user flows. (2) The Flow Level View allows comparing the individual flows based on a chosen metric. (3) The Sequence Level View provides detailed insights into touch interactions, glance, and driving behavior. Our case study proves that UX experts consider our approach a useful addition to their design process.

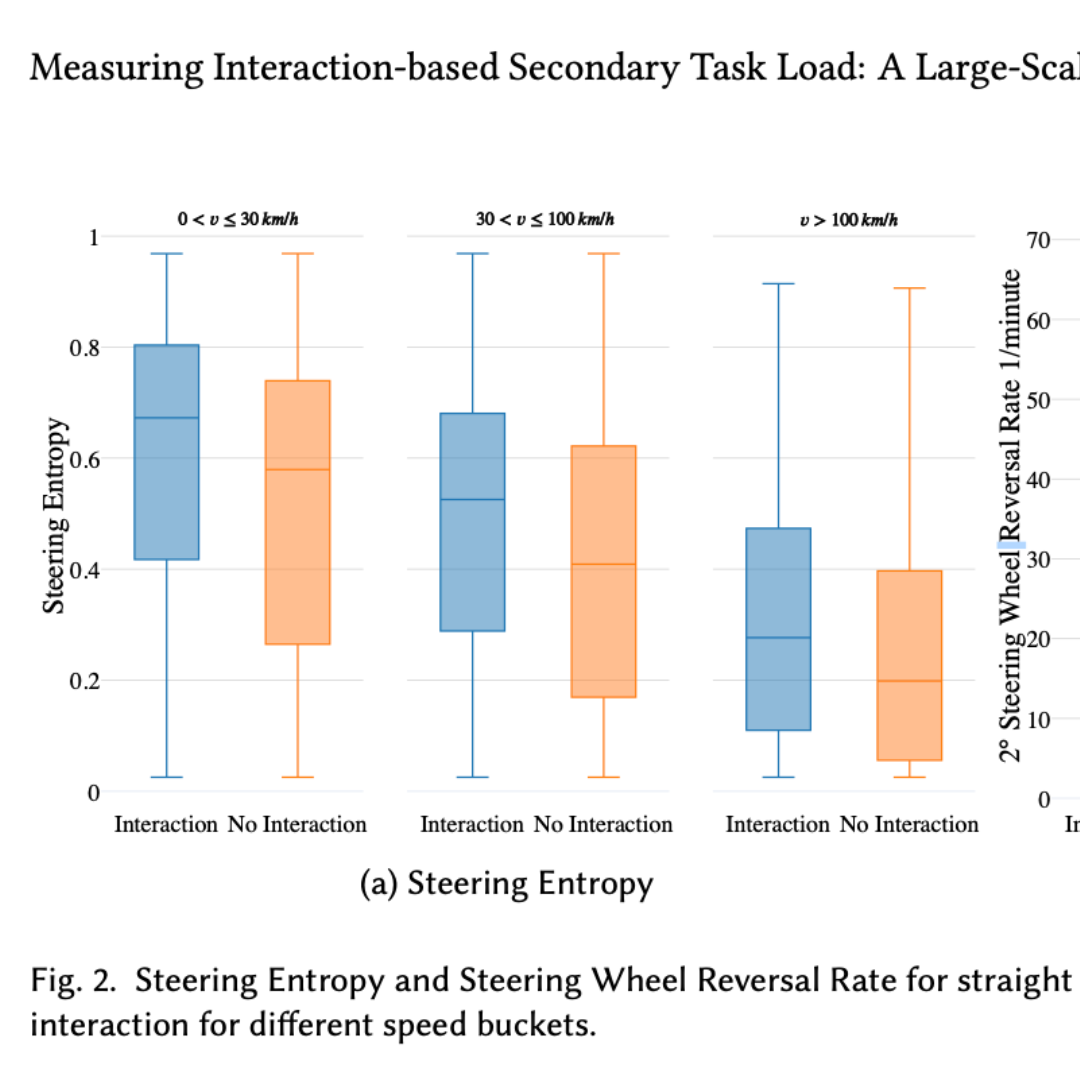

Measuring Interaction-based Secondary Task Load: A Large-Scale Approach using Real-World Driving Data

Patrick Ebel, Christoph Lingenfeld, Andreas Vogelsang

12th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (2021)

ABSTRACT

With ever-improving driver assistance systems and large touchscreens becoming the main in-vehicle interface, drivers are more tempted than ever to engage in distracting non-driving-related tasks. However, little research exists on how driving automation affects drivers’ self-regulation when interacting with center stack touchscreens. To investigate this, we employ multilevel models on a real-world driving dataset consisting of 10,139 sequences. Our results show significant differences in drivers’ interaction and glance behavior in response to varying levels of driving automation, vehicle speed, and road curvature. During partially automated driving, drivers are not only more likely to engage in secondary touchscreen tasks, but their mean glance duration toward the touchscreen also increases by 12 % (Level 1) and 20 % (Level 2) compared to manual driving. We further show that the effect of driving automation on drivers’ self-regulation is larger than that of vehicle speed and road curvature. The derived knowledge can facilitate the safety evaluation of infotainment systems and the development of context-aware driver monitoring systems.

Automotive UX design and data-driven development: Narrowing the gap to support practitioners

Patrick Ebel, Julia Orlovska, Sebastian Hünemeyer, Casper Wickman, Andreas Vogelsang, Rikard Soederberg

Transportation Research Interdisciplinary Perspectives (2021)

ABSTRACT

The development and evaluation of In-Vehicle Information Systems (IVISs) is strongly based on insights from qualitative studies conducted in artificial contexts (e.g., driving simulators or lab experiments). However, the growing complexity of the systems and the uncertainty about the context in which they are used create a need to augment qualitative data with quantitative data collected during real-world driving. In contrast to many digital companies that are already successfully using data-driven methods, Original Equipment Manufacturers (OEMs) are not yet succeeding in realizing the potential such methods offer. We aim to understand what prevents automotive OEMs from applying data-driven methods, what needs practitioners formulate, and how collecting and analyzing usage data from vehicles can enhance UX activities. We adopted a Multiphase Mixed Methods approach comprising two interview studies with more than 15 UX practitioners and two action research studies conducted with two different OEMs. From the four studies, we synthesize the needs of UX designers, extract limitations within the domain that hinder the application of data-driven methods, elaborate on unleveraged potentials, and formulate recommendations to improve the usage of vehicle data. We conclude that, in addition to modernizing the legal, technical, and organizational infrastructure, UX and Data Science must be brought closer together by reducing silo mentality and increasing interdisciplinary collaboration. New tools and methods need to be developed and UX experts must be empowered to make data-based evidence an integral part of the UX design process.

The Role and Potentials of Field User Interaction Data in the Automotive UX Development Lifecycle: An Industry Perspective

Patrick Ebel, Christoph Lingenfeld, Andreas Vogelsang

12th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (2021)

ABSTRACT

We are interested in the role of field user interaction data in the development of In-Vehicle Information Systems (IVISs), the potentials practitioners see in analyzing this data, the concerns they share, and how this compares to companies with digital products. We conducted interviews with 14 UX professionals, 8 from automotive and 6 from digital companies, and analyzed the results by emergent thematic coding. Our key findings indicate that implicit feedback through field user interaction data is currently not evident in the automotive UX development process. Most decisions regarding the design of IVISs are made based on personal preferences and the intuitions of stakeholders. However, the interviewees also indicated that user interaction data has the potential to lower the influence of guesswork and assumptions in the UX design process and can help to make the UX development lifecycle more evidence-based and user-centered.

2020

Destination Prediction Based on Partial Trajectory Data

Patrick Ebel, Ibrahim Emre Göl, Christoph Lingenfelder, Andreas Vogelsang

IEEE Intelligent Vehicles Symposium (2020)

ABSTRACT

Two-thirds of the people who buy a new car prefer to use a substitute instead of the built-in navigation system. However, for many applications, knowledge about a user's intended destination and route is crucial. For example, suggestions for available parking spots close to the destination can be made or ride-sharing opportunities along the route are facilitated. Our approach predicts probable destinations and routes of a vehicle, based on the most recent partial trajectory and additional contextual data. The approach follows a three-step procedure: First, a k-d tree-based space discretization is performed, mapping GPS locations to discrete regions. Secondly, a recurrent neural network is trained to predict the destination based on partial sequences of trajectories. The neural network produces destination scores, signifying the probability of each region being the destination. Finally, the routes to the most probable destinations are calculated. To evaluate the method, we compare multiple neural architectures and present the experimental results of the destination prediction. The experiments are based on two public datasets of non-personalized, timestamped GPS locations of taxi trips. The best performing models were able to predict the destination of a vehicle with a mean error of 1.3 km and 1.43 km respectively.
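
The first step of the procedure above, mapping GPS locations to discrete regions with a k-d tree, can be illustrated with a minimal sketch. This is not the authors' implementation: the abstract does not state the split rule or the leaf size, so the median split, the `max_points` threshold, and all names (`build_tree`, `locate`, `discretize`) are assumptions chosen for illustration.

```python
from typing import List, Optional, Tuple, Union

Point = Tuple[float, float]  # (latitude, longitude); assumed representation


class Leaf:
    """A discrete region, identified by an integer id."""

    def __init__(self, region_id: int) -> None:
        self.region_id = region_id


class Split:
    """An internal node that divides space along one axis at a threshold."""

    def __init__(self, axis: int, threshold: float,
                 low: "Node", high: "Node") -> None:
        self.axis = axis
        self.threshold = threshold
        self.low = low
        self.high = high


Node = Union[Leaf, Split]


def build_tree(points: List[Point], max_points: int, depth: int = 0,
               counter: Optional[List[int]] = None) -> Node:
    """Recursively split at the median until a cell holds <= max_points."""
    if counter is None:
        counter = [0]  # mutable counter that hands out region ids
    axis = depth % 2  # alternate between latitude and longitude
    if len(points) > max_points:
        ordered = sorted(points, key=lambda p: p[axis])
        threshold = ordered[len(ordered) // 2][axis]
        low = [p for p in ordered if p[axis] < threshold]
        high = [p for p in ordered if p[axis] >= threshold]
        if low:  # only split when the cut actually separates points
            return Split(axis, threshold,
                         build_tree(low, max_points, depth + 1, counter),
                         build_tree(high, max_points, depth + 1, counter))
    leaf = Leaf(counter[0])
    counter[0] += 1
    return leaf


def locate(tree: Node, point: Point) -> int:
    """Return the id of the region that contains the given point."""
    node = tree
    while isinstance(node, Split):
        node = node.low if point[node.axis] < node.threshold else node.high
    return node.region_id


def discretize(tree: Node, trajectory: List[Point]) -> List[int]:
    """Turn a GPS trajectory into a sequence of region ids."""
    return [locate(tree, p) for p in trajectory]


# Illustrative data only: two small clusters of made-up coordinates.
sample = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0),
          (10.0, 10.0), (10.0, 11.0), (11.0, 10.0), (11.0, 11.0)]
sample_tree = build_tree(sample, max_points=2)
region_sequence = discretize(sample_tree, [(0.0, 0.0), (0.0, 1.0), (10.0, 10.0)])
```

Because cells are split only where points are dense, areas with many recorded locations end up covered by smaller regions. A sequence of region ids like `region_sequence` is the kind of discrete input a sequence model, such as the recurrent network in the second step, could consume.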